Mirror of https://github.com/infiniflow/ragflow.git, synced 2026-01-19 11:45:10 +08:00

revert white-space changes in docs (#12557)

### What problem does this PR solve?

Trailing white-spaces in commit 6814ace1aa were automatically trimmed by a code editor, which may break documentation typesetting.

This mostly affects the trailing double spaces used for line breaks.

### Type of change

- [x] Documentation Update


@@ -86,22 +86,22 @@ They are highly consistent at the technical base (e.g., vector retrieval, keywor

RAG has demonstrated clear value in several typical scenarios:

1. Enterprise Knowledge Q&A and Internal Search

   By vectorizing corporate private data and combining it with an LLM, RAG can directly return natural-language answers based on authoritative sources, rather than lists of documents. While meeting intelligent Q&A needs, it inherently aligns with corporate requirements for data security, access control, and compliance.

2. Complex Document Understanding and Professional Q&A

   For structurally complex documents like contracts and regulations, the value of RAG lies in its ability to generate accurate, verifiable answers while maintaining context integrity. The accuracy of such a system depends largely on its text chunking and semantic understanding strategies.

3. Dynamic Knowledge Fusion and Decision Support

   In business scenarios requiring the synthesis of information from multiple sources, RAG evolves into a knowledge orchestration and reasoning support system for business decisions. Through a multi-path recall mechanism, it fuses knowledge from different systems and formats, maintaining factual consistency and logical controllability during the generation phase.
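The multi-path recall idea can be illustrated with reciprocal rank fusion (RRF), a simple and widely used way to merge ranked lists coming from different retrievers. This is a minimal sketch, not RAGFlow's actual fusion logic (which may, for example, weight keyword and vector similarity scores instead); the chunk IDs and the constant `k=60` are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge several best-first rankings of chunk IDs into one ranking.

    Each chunk earns 1 / (k + rank) from every list it appears in, so chunks
    that rank well on several recall paths rise to the top.
    """
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, chunk_id in enumerate(ranking, start=1):
            scores[chunk_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID for a stable order.
    return sorted(scores, key=lambda chunk_id: (-scores[chunk_id], chunk_id))


keyword_hits = ["c3", "c1", "c7"]  # hypothetical full-text search results
vector_hits = ["c1", "c4", "c3"]   # hypothetical embedding search results
print(reciprocal_rank_fusion([keyword_hits, vector_hits]))  # ['c1', 'c3', 'c4', 'c7']
```

Because RRF uses only ranks, it sidesteps the fact that full-text relevance scores and cosine similarities live on different scales.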

## The future of RAG

The evolution of RAG is unfolding along several clear paths:

1. RAG as the data foundation for Agents

   RAG and agents have an architecture vs. scenario relationship. For agents to achieve autonomous and reliable decision-making and execution, they must rely on accurate and timely knowledge. RAG provides them with a standardized capability to access private domain knowledge and is an inevitable choice for building knowledge-aware agents.

2. Advanced RAG: Using LLMs to optimize retrieval itself

   The core feature of next-generation RAG is fully utilizing the reasoning capabilities of LLMs to optimize the retrieval process, such as rewriting queries, summarizing or fusing results, or implementing intelligent routing. Empowering every aspect of retrieval with LLMs is key to breaking through current performance bottlenecks.

3. Towards context engineering 2.0

   Current RAG can be viewed as Context Engineering 1.0, whose core is assembling static knowledge context for single Q&A tasks. The forthcoming Context Engineering 2.0 will keep RAG technology at its core and extend it into a system that automatically and dynamically assembles comprehensive context for agents. The context fused by this system will come not only from documents but also from interaction memory, available tools/skills, and real-time environmental information. This marks the transition of agent development from a "handicraft workshop" model to the industrial starting point of automated context engineering.

The essence of RAG is to build a dedicated, efficient, and trustworthy external data interface for large language models; its core is Retrieval, not Generation. Starting from the practical need to solve private data access, its technical depth is reflected in the optimization of retrieval for complex unstructured data. With its deep integration into agent architectures and its development towards automated context engineering, RAG is evolving from a technology that improves Q&A quality into the core infrastructure for building the next generation of trustworthy, controllable, and scalable intelligent applications.

@@ -5,7 +5,6 @@ sidebar_custom_props: {
sidebarIcon: LucideCog
}
---

# Configuration

Configurations for deploying RAGFlow via Docker.

@@ -5,7 +5,6 @@ sidebar_custom_props: {
categoryIcon: LucideBookA
}
---

# Contribution guidelines

General guidelines for RAGFlow's community contributors.

@@ -35,7 +34,7 @@ The list below mentions some contributions you can make, but it is not a complet
1. Fork our GitHub repository.
2. Clone your fork to your local machine:

   `git clone git@github.com:<yourname>/ragflow.git`

3. Create a local branch:

   `git checkout -b my-branch`

4. Provide sufficient information in your commit message:

   `git commit -m 'Provide sufficient info in your commit message'`

@@ -5,7 +5,6 @@ sidebar_custom_props: {
categoryIcon: LucideKey
}
---

# Acquire RAGFlow API key

An API key is required for the RAGFlow server to authenticate your HTTP/Python or MCP requests. This document provides instructions on obtaining a RAGFlow API key.
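For HTTP requests, the key travels in a request header. A minimal standard-library sketch, assuming the `Authorization: Bearer <key>` scheme, a server at `http://127.0.0.1:9380`, and the `/api/v1/datasets` endpoint (check the HTTP API reference for your version); the key is a placeholder, and the request is only built, not sent.

```python
import urllib.request

RAGFLOW_BASE_URL = "http://127.0.0.1:9380"  # assumed server address
API_KEY = "ragflow-xxxxxxxx"                 # placeholder, not a real key


def build_authenticated_request(path: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a GET request that carries the API key."""
    request = urllib.request.Request(f"{RAGFLOW_BASE_URL}{path}", method="GET")
    # Assumption: the server expects the "Authorization: Bearer <key>" scheme.
    request.add_header("Authorization", f"Bearer {api_key}")
    return request


req = build_authenticated_request("/api/v1/datasets", API_KEY)
print(req.get_method(), req.full_url)   # GET http://127.0.0.1:9380/api/v1/datasets
print(req.get_header("Authorization"))  # Bearer ragflow-xxxxxxxx
# To actually send it: urllib.request.urlopen(req, timeout=10)
```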

@@ -5,7 +5,6 @@ sidebar_custom_props: {
categoryIcon: LucidePackage
}
---

# Build RAGFlow Docker image
import Tabs from '@theme/Tabs';
import TabItem from '@theme/TabItem';

@@ -5,7 +5,6 @@ sidebar_custom_props: {
categoryIcon: LucideMonitorPlay
}
---

# Launch service from source

A guide explaining how to set up a RAGFlow service from its source code. By following this guide, you'll be able to debug using the source code.

@@ -39,7 +38,7 @@ cd ragflow/
### Install Python dependencies

1. Install uv:

```bash
pipx install uv
```

@@ -91,13 +90,13 @@ docker compose -f docker/docker-compose-base.yml up -d
```

3. **Optional:** If you cannot access HuggingFace, set the `HF_ENDPOINT` environment variable to use a mirror site:

```bash
export HF_ENDPOINT=https://hf-mirror.com
```

4. Check the configuration in **conf/service_conf.yaml**, ensuring all hosts and ports are correctly set.

5. Run the **entrypoint.sh** script to launch the backend service:

```shell

@@ -126,10 +125,10 @@ docker compose -f docker/docker-compose-base.yml up -d
3. Start up the RAGFlow frontend service:

```bash
npm run dev
```

*The following message appears, showing the IP address and port number of your frontend service:*

@@ -5,20 +5,19 @@ sidebar_custom_props: {
categoryIcon: LucideTvMinimalPlay
}
---

# Launch RAGFlow MCP server

Launch an MCP server from source or via Docker.

---

A RAGFlow Model Context Protocol (MCP) server is designed as an independent component to complement the RAGFlow server. Note that an MCP server must operate alongside a properly functioning RAGFlow server.

An MCP server can start up in either self-host mode (default) or host mode:

- **Self-host mode**:

  When launching an MCP server in self-host mode, you must provide an API key to authenticate the MCP server with the RAGFlow server. In this mode, the MCP server can access *only* the datasets of a specified tenant on the RAGFlow server.

- **Host mode**:

  In host mode, each MCP client can access its own datasets on the RAGFlow server. However, each client request must include a valid API key to authenticate the client with the RAGFlow server.

Once a connection is established, an MCP server communicates with its client in MCP HTTP+SSE (Server-Sent Events) mode, unidirectionally pushing responses from the RAGFlow server to its client in real time.
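Each pushed response arrives as a Server-Sent Event: a few `field: value` lines (`event:`, `data:`) terminated by a blank line. A minimal sketch of a client-side parser for that wire format, assuming well-formed text lines; it ignores the `id` and `retry` fields, and the sample stream (an `endpoint` event followed by a JSON-RPC `message` event, as in the MCP HTTP+SSE transport) uses made-up values.

```python
import json
from typing import Iterable, Iterator, List, Tuple


def parse_sse(lines: Iterable[str]) -> Iterator[Tuple[str, str]]:
    """Yield (event_type, data) pairs from an iterable of SSE text lines."""
    event_type = "message"          # default type when no "event:" field is sent
    data_lines: List[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":              # a blank line dispatches the pending event
            if data_lines:
                yield event_type, "\n".join(data_lines)
            event_type, data_lines = "message", []
            continue
        if line.startswith(":"):    # comment / keep-alive line
            continue
        field, _, value = line.partition(":")
        if value.startswith(" "):   # one leading space after the colon is dropped
            value = value[1:]
        if field == "event":
            event_type = value
        elif field == "data":
            data_lines.append(value)


sample_stream = [
    "event: endpoint\n",
    "data: /messages/?session_id=abc123\n",
    "\n",
    "event: message\n",
    'data: {"jsonrpc": "2.0", "id": 1, "result": {}}\n',
    "\n",
]
for kind, payload in parse_sse(sample_stream):
    print(kind, json.loads(payload) if kind == "message" else payload)
```

An event that is not followed by a blank line (for example, when the connection drops mid-event) is never yielded, which matches how browsers treat incomplete events.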

@@ -32,9 +31,9 @@ Once a connection is established, an MCP server communicates with its client in
If you wish to try out our MCP server without upgrading RAGFlow, community contributor [yiminghub2024](https://github.com/yiminghub2024) 👏 shares their recommended steps [here](#launch-an-mcp-server-without-upgrading-ragflow).
:::

## Launch an MCP server

You can start an MCP server either from source code or via Docker.

### Launch from source code

@@ -51,7 +50,7 @@ uv run mcp/server/server.py --host=127.0.0.1 --port=9382 --base-url=http://127.0
# uv run mcp/server/server.py --host=127.0.0.1 --port=9382 --base-url=http://127.0.0.1:9380 --mode=host
```

Where:

- `host`: The MCP server's host address.
- `port`: The MCP server's listening port.

@@ -97,7 +96,7 @@ The MCP server is designed as an optional component that complements the RAGFlow
# - --no-json-response # Disables JSON responses for the streamable-HTTP transport
```

Where:

- `mcp-host`: The MCP server's host address.
- `mcp-port`: The MCP server's listening port.

@@ -122,13 +121,13 @@ Run `docker compose -f docker-compose.yml up` to launch the RAGFlow server toget
docker-ragflow-cpu-1 | Starting MCP Server on 0.0.0.0:9382 with base URL http://127.0.0.1:9380...
docker-ragflow-cpu-1 | Starting 1 task executor(s) on host 'dd0b5e07e76f'...
docker-ragflow-cpu-1 | 2025-04-18 15:41:18,816 INFO 27 ragflow_server log path: /ragflow/logs/ragflow_server.log, log levels: {'peewee': 'WARNING', 'pdfminer': 'WARNING', 'root': 'INFO'}
docker-ragflow-cpu-1 |
docker-ragflow-cpu-1 | __ __ ____ ____ ____ _____ ______ _______ ____
docker-ragflow-cpu-1 | | \/ |/ ___| _ \ / ___|| ____| _ \ \ / / ____| _ \
docker-ragflow-cpu-1 | | |\/| | | | |_) | \___ \| _| | |_) \ \ / /| _| | |_) |
docker-ragflow-cpu-1 | | | | | |___| __/ ___) | |___| _ < \ V / | |___| _ <
docker-ragflow-cpu-1 | |_| |_|\____|_| |____/|_____|_| \_\ \_/ |_____|_| \_\
docker-ragflow-cpu-1 |
docker-ragflow-cpu-1 | MCP launch mode: self-host
docker-ragflow-cpu-1 | MCP host: 0.0.0.0
docker-ragflow-cpu-1 | MCP port: 9382

@@ -141,13 +140,13 @@ Run `docker compose -f docker-compose.yml up` to launch the RAGFlow server toget
docker-ragflow-cpu-1 | 2025-04-18 15:41:23,263 INFO 27 init database on cluster mode successfully
docker-ragflow-cpu-1 | 2025-04-18 15:41:25,318 INFO 27 load_model /ragflow/rag/res/deepdoc/det.onnx uses CPU
docker-ragflow-cpu-1 | 2025-04-18 15:41:25,367 INFO 27 load_model /ragflow/rag/res/deepdoc/rec.onnx uses CPU
docker-ragflow-cpu-1 | ____ ___ ______ ______ __
docker-ragflow-cpu-1 | / __ \ / | / ____// ____// /____ _ __
docker-ragflow-cpu-1 | / /_/ // /| | / / __ / /_ / // __ \| | /| / /
docker-ragflow-cpu-1 | / _, _// ___ |/ /_/ // __/ / // /_/ /| |/ |/ /
docker-ragflow-cpu-1 | /_/ |_|/_/ |_|\____//_/ /_/ \____/ |__/|__/
docker-ragflow-cpu-1 |
docker-ragflow-cpu-1 |
docker-ragflow-cpu-1 | 2025-04-18 15:41:29,088 INFO 27 RAGFlow version: v0.18.0-285-gb2c299fa full
docker-ragflow-cpu-1 | 2025-04-18 15:41:29,088 INFO 27 project base: /ragflow
docker-ragflow-cpu-1 | 2025-04-18 15:41:29,088 INFO 27 Current configs, from /ragflow/conf/service_conf.yaml:

@@ -156,12 +155,12 @@ Run `docker compose -f docker-compose.yml up` to launch the RAGFlow server toget
docker-ragflow-cpu-1 | * Running on all addresses (0.0.0.0)
docker-ragflow-cpu-1 | * Running on http://127.0.0.1:9380
docker-ragflow-cpu-1 | * Running on http://172.19.0.6:9380
docker-ragflow-cpu-1 | ______ __ ______ __
docker-ragflow-cpu-1 | /_ __/___ ______/ /__ / ____/ _____ _______ __/ /_____ _____
docker-ragflow-cpu-1 | / / / __ `/ ___/ //_/ / __/ | |/_/ _ \/ ___/ / / / __/ __ \/ ___/
docker-ragflow-cpu-1 | / / / /_/ (__ ) ,< / /____> </ __/ /__/ /_/ / /_/ /_/ / /
docker-ragflow-cpu-1 | /_/ \__,_/____/_/|_| /_____/_/|_|\___/\___/\__,_/\__/\____/_/
docker-ragflow-cpu-1 |
docker-ragflow-cpu-1 | 2025-04-18 15:41:34,501 INFO 32 TaskExecutor: RAGFlow version: v0.18.0-285-gb2c299fa full
docker-ragflow-cpu-1 | 2025-04-18 15:41:34,501 INFO 32 Use Elasticsearch http://es01:9200 as the doc engine.
...
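To confirm which mode and address the MCP server actually picked up, you can scan its startup output for the `MCP launch mode`, `MCP host`, and `MCP port` lines shown in the log excerpt above. A minimal sketch, assuming that log format stays stable; the function name and pattern are illustrative and not part of RAGFlow.

```python
import re
from typing import Dict, Union

# "<container> | MCP <key>: <value>", e.g. "docker-ragflow-cpu-1 | MCP port: 9382".
# The "<container> |" prefix added by `docker compose` is optional.
SETTING_RE = re.compile(r"^(?:\S+\s*\|\s*)?MCP (launch mode|host|port):\s*(\S+)\s*$")


def mcp_settings(log_text: str) -> Dict[str, Union[str, int]]:
    """Collect the MCP launch mode, host, and port announced at startup."""
    settings: Dict[str, Union[str, int]] = {}
    for line in log_text.splitlines():
        match = SETTING_RE.match(line)
        if match:
            key, value = match.groups()
            settings[key] = int(value) if key == "port" else value
    return settings


startup_log = """\
docker-ragflow-cpu-1 | MCP launch mode: self-host
docker-ragflow-cpu-1 | MCP host: 0.0.0.0
docker-ragflow-cpu-1 | MCP port: 9382
"""
settings = mcp_settings(startup_log)
print(settings)  # {'launch mode': 'self-host', 'host': '0.0.0.0', 'port': 9382}
if settings.get("host") == "0.0.0.0":
    print("Note: the MCP server is listening on all network interfaces.")
```

In practice you could feed it the output of `docker logs docker-ragflow-cpu-1`. A host of `0.0.0.0`, as in this excerpt, means the server accepts connections on every interface, which the security considerations on this page advise against in public environments.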

@@ -173,11 +172,11 @@ Run `docker compose -f docker-compose.yml up` to launch the RAGFlow server toget
This section is contributed by our community contributor [yiminghub2024](https://github.com/yiminghub2024). 👏
:::

1. Prepare all MCP-specific files and directories.

   i. Copy the [mcp/](https://github.com/infiniflow/ragflow/tree/main/mcp) directory to your local working directory.

   ii. Copy [docker/docker-compose.yml](https://github.com/infiniflow/ragflow/blob/main/docker/docker-compose.yml) locally.

   iii. Copy [docker/entrypoint.sh](https://github.com/infiniflow/ragflow/blob/main/docker/entrypoint.sh) locally.

   iv. Install the required dependencies using `uv`:

   - Run `uv add mcp` or
   - Copy [pyproject.toml](https://github.com/infiniflow/ragflow/blob/main/pyproject.toml) locally and run `uv sync --python 3.12`.

2. Edit **docker-compose.yml** to enable MCP (disabled by default).

@@ -197,7 +196,7 @@ docker logs docker-ragflow-cpu-1

## Security considerations

As MCP technology is still at an early stage and no official best practices for authentication or authorization have been established, RAGFlow currently uses an [API key](./acquire_ragflow_api_key.md) to validate identity for the operations described earlier. However, in public environments, this makeshift solution could expose your MCP server to potential network attacks. Therefore, when running a local SSE server, it is recommended to bind only to localhost (`127.0.0.1`) rather than to all interfaces (`0.0.0.0`).

For further guidance, see the [official MCP documentation](https://modelcontextprotocol.io/docs/concepts/transports#security-considerations).
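The difference between the two bind addresses is easy to see with a plain TCP socket: a listener bound to `127.0.0.1` only accepts connections that originate on the same machine, whereas `0.0.0.0` accepts them on every network interface. A minimal standard-library sketch; it binds to port 0 so the operating system picks a free port, whereas the MCP server on this page uses 9382.

```python
import ipaddress
import socket


def open_listener(host: str, port: int = 0) -> socket.socket:
    """Open a TCP listener on host:port (port 0 lets the OS choose)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.bind((host, port))
    sock.listen()
    return sock


def is_loopback_only(sock: socket.socket) -> bool:
    """True if the listener is bound to a loopback address."""
    host, _port = sock.getsockname()
    return ipaddress.ip_address(host).is_loopback


listener = open_listener("127.0.0.1")  # the recommended local-only binding
print(listener.getsockname(), is_loopback_only(listener))

# Local clients can still connect through the loopback interface.
client = socket.create_connection(listener.getsockname(), timeout=5)
conn, _addr = listener.accept()
for s in (conn, client, listener):
    s.close()
```

For the source launch described on this page, this corresponds to passing `--host=127.0.0.1`.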

@@ -205,11 +204,11 @@ For further guidance, see the [official MCP documentation](https://modelcontextp

### When to use an API key for authentication?

The use of an API key depends on the operating mode of your MCP server.

- **Self-host mode** (default):

  When starting the MCP server in self-host mode, provide an API key at launch to authenticate the MCP server with the RAGFlow server:

  - If launching from source, include the API key in the command.
  - If launching from Docker, update the API key in **docker/docker-compose.yml**.

- **Host mode**:

  If your RAGFlow MCP server is working in host mode, include the API key in the `headers` of your client requests to authenticate your client with the RAGFlow server. An example is available [here](https://github.com/infiniflow/ragflow/blob/main/mcp/client/client.py).
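The rule above comes down to who supplies the key and when. A minimal sketch with hypothetical helper names: in self-host mode the server needs a key at launch and, as an assumption of this sketch, clients send none; in host mode every client request carries the client's own key. The header name `api_key` is an assumption based on the linked client.py example, so check that file for the name your version expects.

```python
from typing import Dict, Optional

SELF_HOST, HOST = "self-host", "host"


def check_mode(mode: str) -> str:
    """Reject anything other than the two documented launch modes."""
    if mode not in (SELF_HOST, HOST):
        raise ValueError(f"unknown MCP mode: {mode!r}")
    return mode


def key_required_at_launch(mode: str) -> bool:
    """Self-host mode authenticates the MCP server itself when it starts."""
    return check_mode(mode) == SELF_HOST


def client_request_headers(mode: str, api_key: Optional[str] = None,
                           header_name: str = "api_key") -> Dict[str, str]:
    """Headers an MCP client attaches to each request (sketch)."""
    if check_mode(mode) == HOST:
        if not api_key:
            raise ValueError("host mode: every client request needs an API key")
        return {header_name: api_key}
    return {}  # self-host: the server already holds the key


print(key_required_at_launch("self-host"))                  # True
print(client_request_headers("host", "ragflow-xxxxxxxx"))   # {'api_key': 'ragflow-xxxxxxxx'}
```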

@@ -6,7 +6,6 @@ sidebar_custom_props: {
}

---

# RAGFlow MCP client examples

Python and curl MCP client examples.

@@ -39,11 +38,11 @@ When interacting with the MCP server via HTTP requests, follow this initializati

1. **The client sends an `initialize` request** with protocol version and capabilities.
2. **The server replies with an `initialize` response**, including the supported protocol and capabilities.
3. **The client confirms readiness with an `initialized` notification**.

_The connection is established between the client and the server, and further operations (such as tool listing) may proceed._
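On the wire, these three steps are JSON-RPC 2.0 messages. A minimal sketch of them as Python dicts, assuming MCP protocol version `2024-11-05` (the revision that defines the HTTP+SSE transport) and made-up client and server names; a real RAGFlow MCP server's `initialize` response will carry its own capabilities and server info.

```python
import json

PROTOCOL_VERSION = "2024-11-05"  # assumed; use a version your client supports

# 1. Client -> server: a request (it has an "id", so a response is expected).
initialize_request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "initialize",
    "params": {
        "protocolVersion": PROTOCOL_VERSION,
        "capabilities": {},
        "clientInfo": {"name": "example-client", "version": "0.1.0"},
    },
}

# 2. Server -> client: a response with the same "id" (illustrative contents).
initialize_response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "protocolVersion": PROTOCOL_VERSION,
        "capabilities": {"tools": {}},
        "serverInfo": {"name": "example-server", "version": "0.1.0"},
    },
}

# 3. Client -> server: a notification (no "id", so the server sends no reply).
initialized_notification = {"jsonrpc": "2.0", "method": "notifications/initialized"}


def handshake_ok(request: dict, response: dict) -> bool:
    """True if the response answers the request with the requested version.

    Simplification: the spec lets a server answer with a different version,
    which the client may then accept or reject; this sketch requires a match.
    """
    result = response.get("result", {})
    return (response.get("id") == request["id"]
            and result.get("protocolVersion") == request["params"]["protocolVersion"])


for message in (initialize_request, initialize_response, initialized_notification):
    print(json.dumps(message))
print(handshake_ok(initialize_request, initialize_response))  # True
```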

:::tip NOTE
For more information about this initialization process, see [here](https://modelcontextprotocol.io/docs/concepts/architecture#1-initialization).
:::

In the following sections, we will walk you through a complete tool calling process.

@@ -5,7 +5,6 @@ sidebar_custom_props: {
categoryIcon: LucideToolCase
}
---

# RAGFlow MCP tools

The MCP server currently offers a specialized tool to assist users in searching for relevant information powered by RAGFlow DeepDoc technology:

@@ -5,7 +5,6 @@ sidebar_custom_props: {
categoryIcon: LucideShuffle
}
---

# Switch document engine

Switch your doc engine from Elasticsearch to Infinity.

docs/faq.mdx

@@ -5,7 +5,6 @@ sidebar_custom_props: {
sidebarIcon: LucideCircleQuestionMark
}
---

# FAQs

Answers to questions about general features, troubleshooting, usage, and more.

@@ -44,11 +43,11 @@ You can find the RAGFlow version number on the **System** page of the UI:
If you build RAGFlow from source, the version number is also in the system log:

```
    ____   ___    ______ ______ __
   / __ \ /   |  / ____// ____// /____  _      __
  / /_/ // /| | / / __ / /_   / // __ \| | /| / /
 / _, _// ___ |/ /_/ // __/  / // /_/ /| |/ |/ /
/_/ |_|/_/  |_|\____//_/    /_/ \____/ |__/|__/

2025-02-18 10:10:43,835 INFO 1445658 RAGFlow version: v0.15.0-50-g6daae7f2
```
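The version string in this log line appears to follow the `git describe` convention: the nearest release tag, the number of commits made since that tag, and `g` followed by the abbreviated commit hash. A minimal sketch that extracts those parts, assuming that format (the Docker log example, `v0.18.0-285-gb2c299fa full`, has the same shape); a build made exactly at a tag would print just the tag, which the optional groups allow for.

```python
import re
from typing import Optional, Tuple

# <tag>-<commits since tag>-g<abbreviated hash>; the suffix is optional.
VERSION_RE = re.compile(
    r"RAGFlow version: (?P<tag>v\d+\.\d+\.\d+)(?:-(?P<commits>\d+)-g(?P<sha>[0-9a-f]+))?"
)


def parse_ragflow_version(log_line: str) -> Optional[Tuple[str, int, Optional[str]]]:
    """Return (tag, commits_since_tag, short_sha), or None if absent."""
    match = VERSION_RE.search(log_line)
    if match is None:
        return None
    commits = int(match["commits"]) if match["commits"] else 0
    return match["tag"], commits, match["sha"]


line = "2025-02-18 10:10:43,835 INFO 1445658 RAGFlow version: v0.15.0-50-g6daae7f2"
print(parse_ragflow_version(line))  # ('v0.15.0', 50, '6daae7f2')
```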

@@ -178,7 +177,7 @@ To fix this issue, use https://hf-mirror.com instead:
3. Start up the server:

```bash
docker compose up -d
```

---

@@ -211,11 +210,11 @@ You will not log in to RAGFlow unless the server is fully initialized. Run `dock
*The server is successfully initialized if your system displays the following:*

```
    ____   ___    ______ ______ __
   / __ \ /   |  / ____// ____// /____  _      __
  / /_/ // /| | / / __ / /_   / // __ \| | /| / /
 / _, _// ___ |/ /_/ // __/  / // /_/ /| |/ |/ /
/_/ |_|/_/  |_|\____//_/    /_/ \____/ |__/|__/

* Running on all addresses (0.0.0.0)
* Running on http://127.0.0.1:9380

@@ -318,7 +317,7 @@ The status of a Docker container status does not necessarily reflect the status
$ docker ps
```

*The status of a healthy Elasticsearch component should look as follows:*

```
91220e3285dd docker.elastic.co/elasticsearch/elasticsearch:8.11.3 "/bin/tini -- /usr/l…" 11 hours ago Up 11 hours (healthy) 9300/tcp, 0.0.0.0:9200->9200/tcp, :::9200->9200/tcp ragflow-es-01

@@ -371,7 +370,7 @@ Yes, we do. See the Python files under the **rag/app** folder.
$ docker ps
```

*The status of a healthy MinIO component should look as follows:*

```bash
cd29bcb254bc quay.io/minio/minio:RELEASE.2023-12-20T01-00-02Z "/usr/bin/docker-ent…" 2 weeks ago Up 11 hours 0.0.0.0:9001->9001/tcp, :::9001->9001/tcp, 0.0.0.0:9000->9000/tcp, :::9000->9000/tcp ragflow-minio

@@ -454,7 +453,7 @@ See [Upgrade RAGFlow](./guides/upgrade_ragflow.mdx) for more information.

To switch your document engine from Elasticsearch to [Infinity](https://github.com/infiniflow/infinity):

1. Stop all running containers:

```bash
$ docker compose -f docker/docker-compose.yml down -v

@@ -464,7 +463,7 @@ To switch your document engine from Elasticsearch to [Infinity](https://github.c
:::

2. In **docker/.env**, set `DOC_ENGINE=${DOC_ENGINE:-infinity}`.
3. Restart your Docker containers:

```bash
$ docker compose -f docker-compose.yml up -d

@ -509,12 +508,12 @@ From v0.22.0 onwards, RAGFlow includes MinerU (≥ 2.6.3) as an optional PDF pa

|

||||

- `"vlm-mlx-engine"`

|

||||

- `"vlm-vllm-async-engine"`

|

||||

- `"vlm-lmdeploy-engine"`.

|

||||

- `MINERU_SERVER_URL`: (optional) The downstream vLLM HTTP server (e.g., `http://vllm-host:30000`). Applicable when `MINERU_BACKEND` is set to `"vlm-http-client"`.

|

||||

- `MINERU_SERVER_URL`: (optional) The downstream vLLM HTTP server (e.g., `http://vllm-host:30000`). Applicable when `MINERU_BACKEND` is set to `"vlm-http-client"`.

|

||||

- `MINERU_OUTPUT_DIR`: (optional) The local directory for holding the outputs of the MinerU API service (zip/JSON) before ingestion.

|

||||

- `MINERU_DELETE_OUTPUT`: Whether to delete temporary output when a temporary directory is used:

|

||||

- `1`: Delete.

|

||||

- `0`: Retain.

|

||||

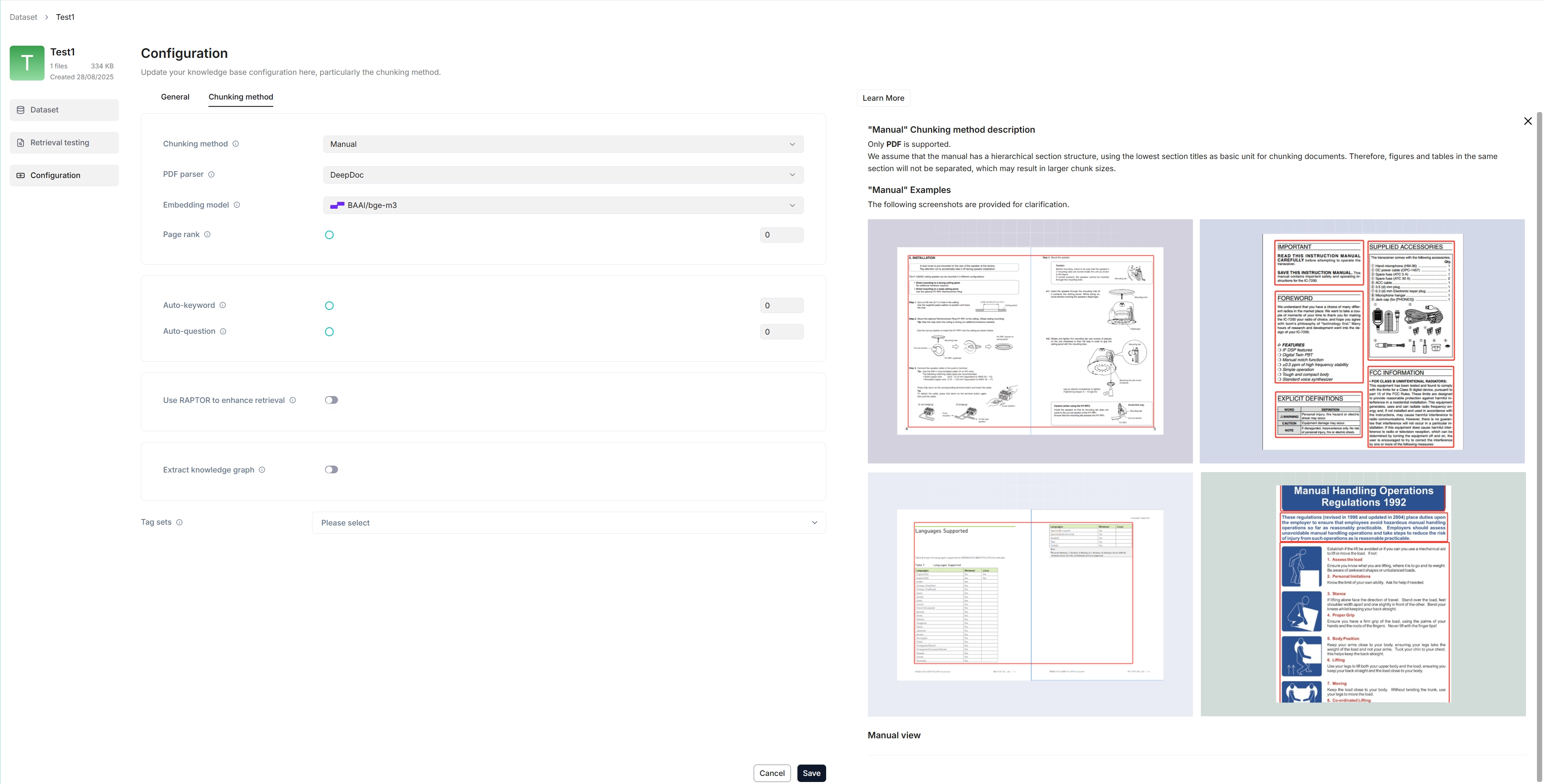

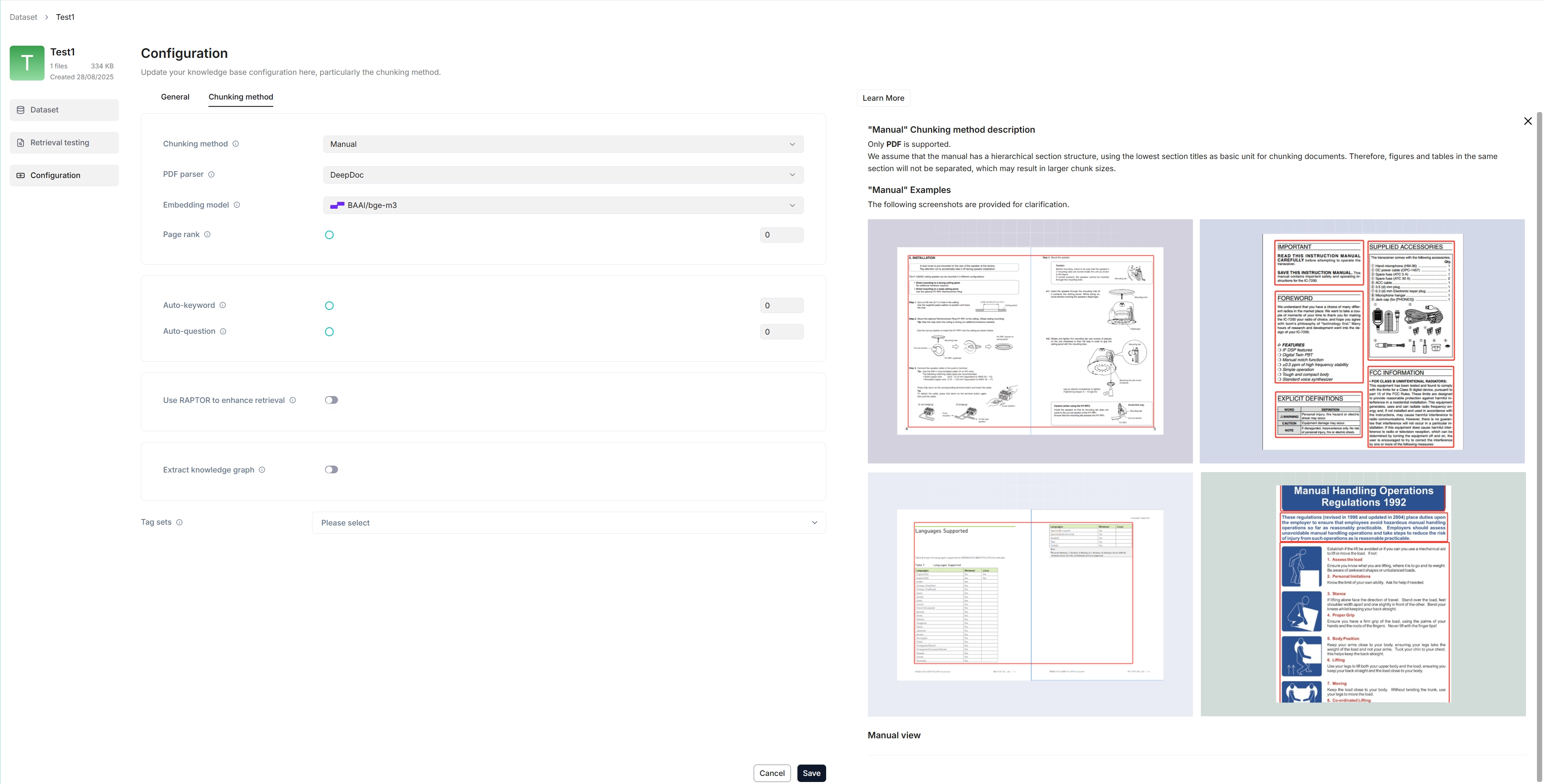

3. In the web UI, navigate to your dataset's **Configuration** page and find the **Ingestion pipeline** section:

|

||||

3. In the web UI, navigate to your dataset's **Configuration** page and find the **Ingestion pipeline** section:

|

||||

- If you decide to use a chunking method from the **Built-in** dropdown, ensure it supports PDF parsing, then select **MinerU** from the **PDF parser** dropdown.

|

||||

- If you use a custom ingestion pipeline instead, select **MinerU** in the **PDF parser** section of the **Parser** component.

|

||||

|

||||

|

||||

@@ -5,7 +5,6 @@ sidebar_custom_props: {
categoryIcon: LucideSquareTerminal
}
---

# Admin CLI

The RAGFlow Admin CLI is a command-line-based system administration tool that offers administrators an efficient and flexible method for system interaction and control. Operating on a client-server architecture, it communicates in real time with the Admin Service, receiving administrator commands and dynamically returning execution results.

@@ -30,9 +29,9 @@ The RAGFlow Admin CLI is a command-line-based system administration tool that of
The default password is `admin`.

**Parameters:**

- `-h`: RAGFlow admin server host address
- `-p`: RAGFlow admin server port

## Default administrative account

@@ -5,8 +5,6 @@ sidebar_custom_props: {
categoryIcon: LucideActivity
}
---

# Admin Service

The Admin Service is the core backend management service of the RAGFlow system, providing comprehensive system administration capabilities through centralized API interfaces for managing and controlling the entire platform. Adopting a client-server architecture, it supports access and operations via both a Web UI and an Admin CLI, ensuring flexible and efficient execution of administrative tasks.

@@ -27,7 +25,7 @@ With its unified interface design, the Admin Service combines the convenience of
python admin/server/admin_server.py
```

The service will start and listen for incoming connections from the CLI on the configured port.

### Using docker image

@@ -5,7 +5,6 @@ sidebar_custom_props: {
categoryIcon: LucidePalette
}
---

# Admin UI

The RAGFlow Admin UI is a web-based interface that provides comprehensive system status monitoring and user management capabilities.

@ -5,7 +5,6 @@ sidebar_custom_props: {

|

||||

categoryIcon: RagAiAgent

|

||||

}

|

||||

---

|

||||

|

||||

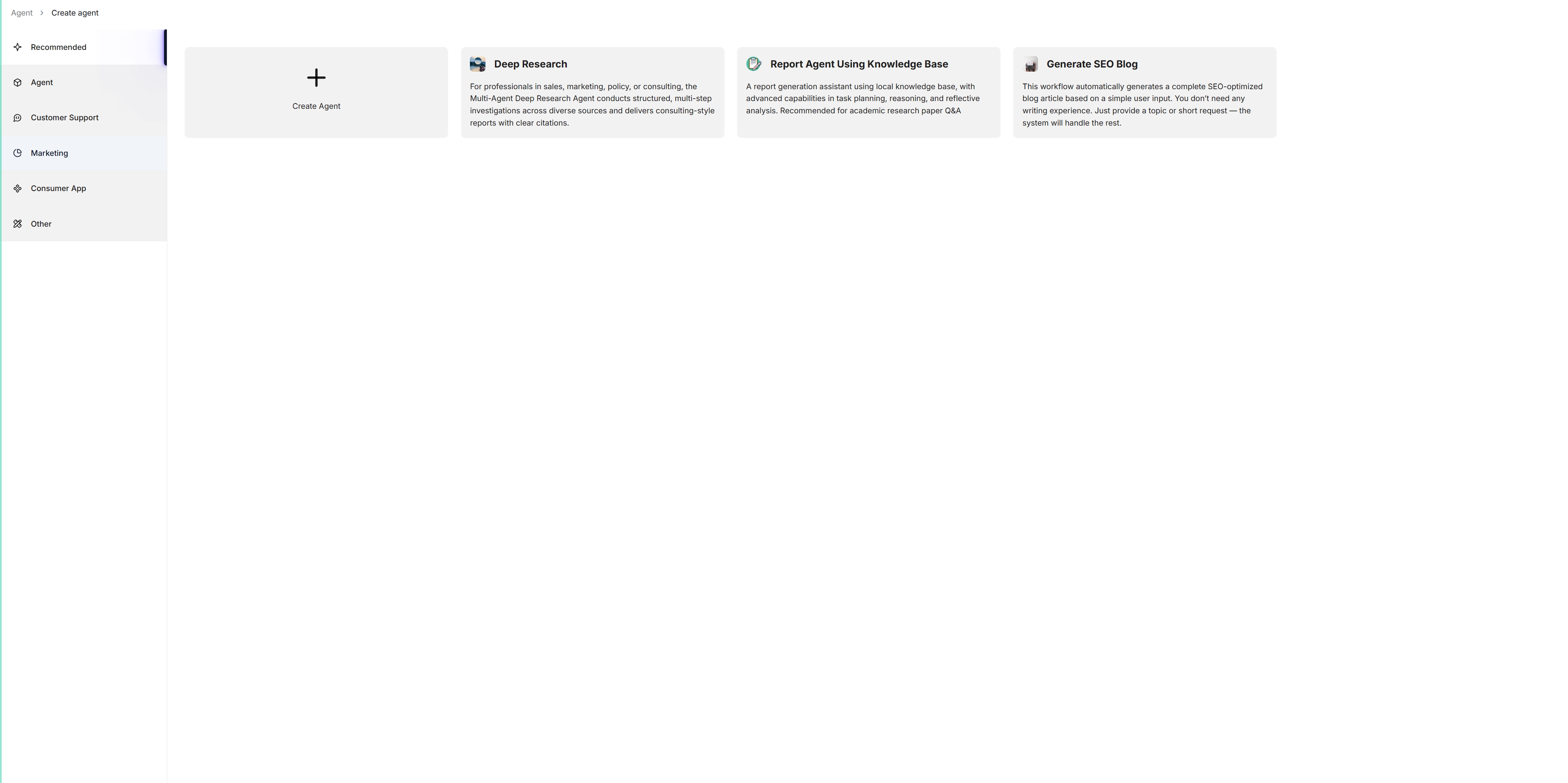

# Agent component

|

||||

|

||||

The component equipped with reasoning, tool usage, and multi-agent collaboration capabilities.

|

||||

@@ -19,7 +18,7 @@ An **Agent** component fine-tunes the LLM and sets its prompt. From v0.20.5 onwa

## Scenarios

An **Agent** component is essential when you need the LLM to assist with summarizing, translating, or controlling various tasks.

## Prerequisites

@@ -31,13 +30,13 @@ An **Agent** component is essential when you need the LLM to assist with summari

## Quickstart

### 1. Click on an **Agent** component to show its configuration panel

The corresponding configuration panel appears to the right of the canvas. Use this panel to define and fine-tune the **Agent** component's behavior.

### 2. Select your model

Click **Model** and select a chat model from the dropdown menu.

:::tip NOTE
If no model appears, check whether you have added a chat model on the **Model providers** page.

@@ -58,7 +57,7 @@ In this quickstart, we assume your **Agent** component is used standalone (witho

### 5. Skip Tools and Agent

The **+ Add tools** and **+ Add agent** sections are used *only* when you need to configure your **Agent** component as a planner (with tools or sub-Agents beneath). In this quickstart, we assume your **Agent** component is used standalone (without tools or sub-Agents beneath).

### 6. Choose the next component

@@ -74,7 +73,7 @@ In this section, we assume your **Agent** will be configured as a planner, with

### 2. Configure your Tavily MCP server

Update your MCP server's name, URL (including the API key), server type, and other necessary settings. When configured correctly, the available tools will be displayed.

@@ -113,7 +112,7 @@ On the canvas, click the newly-populated Tavily server to view and select its av

Click the dropdown menu of **Model** to show the model configuration window.

- **Model**: The chat model to use.
  - Ensure you set the chat model correctly on the **Model providers** page.
  - You can use different models for different components to increase flexibility or improve overall performance.
- **Creativity**: A shortcut to **Temperature**, **Top P**, **Presence penalty**, and **Frequency penalty** settings, indicating the freedom level of the model. Each preset (**Improvise**, **Precise**, or **Balance**) corresponds to a unique combination of **Temperature**, **Top P**, **Presence penalty**, and **Frequency penalty**.

@ -121,21 +120,21 @@ Click the dropdown menu of **Model** to show the model configuration window.

- **Improvise**: Produces more creative responses.

- **Precise**: (Default) Produces more conservative responses.

- **Balance**: A middle ground between **Improvise** and **Precise**.

- **Temperature**: The randomness level of the model's output.

Defaults to 0.1.

- Lower values lead to more deterministic and predictable outputs.

- Higher values lead to more creative and varied outputs.

- A temperature of zero makes decoding effectively greedy, so the same prompt generally produces the same output.

- **Top P**: Nucleus sampling.

- Restricts sampling to the smallest set of most likely tokens whose cumulative probability reaches a threshold *P*, which reduces the likelihood of generating unnatural text from the low-probability tail.

- Defaults to 0.3.

- **Presence penalty**: Encourages the model to include a more diverse range of tokens in the response.

- A higher **presence penalty** value makes the model more likely to generate tokens that have not yet appeared in the generated text.

- Defaults to 0.4.

- **Frequency penalty**: Discourages the model from repeating the same words or phrases too frequently in the generated text.

- A higher **frequency penalty** value results in the model being more conservative in its use of repeated tokens.

- Defaults to 0.7.

- **Max tokens**:

This sets the maximum length of the model's output, measured in the number of tokens (words or pieces of words). It is disabled by default, allowing the model to determine the number of tokens in its responses.
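
To make **Temperature** and **Top P** more concrete, here is a minimal, provider-agnostic sketch of how these two settings typically reshape a next-token distribution before a token is sampled. It is not RAGFlow code: RAGFlow's actual handling is not shown here, and each model provider applies these settings in its own way. The function names and toy logits are illustrative assumptions, and the defaults in `sample()` simply mirror the defaults listed above.

```python
import math
import random


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Divide logits by the temperature, then normalize them into probabilities."""
    if temperature <= 0:
        # Treat zero as greedy decoding: all probability mass goes to the top token.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs: list[float], top_p: float) -> list[float]:
    """Keep the smallest set of most likely tokens whose cumulative probability reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = set(), 0.0
    for i in order:
        kept.add(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    # Renormalize so the surviving probabilities sum to 1.
    return [probs[i] / mass if i in kept else 0.0 for i in range(len(probs))]


def sample(logits: list[float], temperature: float = 0.1, top_p: float = 0.3) -> int:
    """Return the index of the sampled token after temperature scaling and Top P filtering."""
    probs = top_p_filter(softmax_with_temperature(logits, temperature), top_p)
    return random.choices(range(len(probs)), weights=probs, k=1)[0]


toy_logits = [2.0, 1.5, 0.3, -1.0]  # hypothetical scores for four candidate tokens
print(softmax_with_temperature(toy_logits, 0.1))  # nearly all mass on token 0
print(softmax_with_temperature(toy_logits, 1.0))  # a flatter distribution
print(top_p_filter(softmax_with_temperature(toy_logits, 1.0), 0.3))  # only token 0 survives
```

Treating a temperature of zero as pure greedy decoding is a simplification; hosted models can still vary slightly between runs.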

:::tip NOTE

@ -145,7 +144,7 @@ Click the dropdown menu of **Model** to show the model configuration window.

### System prompt

Typically, you use the system prompt to describe the task for the LLM, specify how it should respond, and outline other miscellaneous requirements. We do not plan to elaborate on this topic, as it can be as extensive as prompt engineering. However, please be aware that the system prompt is often used in conjunction with keys (variables), which serve as various data inputs for the LLM.

An **Agent** component relies on keys (variables) to specify its data inputs. Its immediate upstream component is *not* necessarily its data input, and the arrows in the workflow indicate *only* the processing sequence. Keys in an **Agent** component are used in conjunction with the system prompt to specify data inputs for the LLM. Use a forward slash `/` or the **(x)** button to show the keys to use.

@ -193,11 +192,11 @@ From v0.20.5 onwards, four framework-level prompt blocks are available in the **

The user-defined prompt. Defaults to `sys.query`, the user query. As a general rule, when using the **Agent** component as a standalone module (not as a planner), you usually need to specify the corresponding **Retrieval** component’s output variable (`formalized_content`) here as part of the input to the LLM.

### Tools

You can use an **Agent** component as a collaborator that reasons and reflects with the aid of other tools; for instance, **Retrieval** can serve as one such tool for an **Agent**.

### Agent

You use an **Agent** component as a collaborator that reasons and reflects with the aid of subagents or other tools, forming a multi-agent system.

@ -5,7 +5,6 @@ sidebar_custom_props: {

categoryIcon: LucideMessageSquareDot

}

---

# Await response component

A component that halts the workflow and awaits user input.

@ -26,7 +25,7 @@ Whether to show the message defined in the **Message** field.

### Message

The static message to send out.

Click **+ Add message** to add message options. When multiple messages are supplied, the **Await response** component randomly selects one to send.

@ -34,9 +33,9 @@ Click **+ Add message** to add message options. When multiple messages are suppl

You can define global variables within the **Await response** component, which can be either mandatory or optional. Once set, users will need to provide values for these variables when engaging with the agent. Click **+** to add a global variable. Each variable has the following attributes:

- **Name**: _Required_

A descriptive name providing additional details about the variable.

- **Type**: _Required_

The type of the variable:

- **Single-line text**: Accepts a single line of text without line breaks.

- **Paragraph text**: Accepts multiple lines of text, including line breaks.

@ -44,7 +43,7 @@ You can define global variables within the **Await response** component, which c

- **File upload**: Requires the user to upload one or more files.

- **Number**: Accepts a number as input.

- **Boolean**: Requires the user to toggle between on and off.

- **Key**: _Required_

The unique variable name.

- **Optional**: A toggle indicating whether the variable is optional.
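
One way to picture these attributes is as a small schema that the agent checks user input against. In the sketch below, the `GlobalVariable` class, the short type labels, and the `validate_inputs` helper are hypothetical names for illustration, not RAGFlow's internal data model.

```python
from dataclasses import dataclass
from typing import Any, Callable

# Hypothetical short labels for the variable types listed above.
VALIDATORS: dict[str, Callable[[Any], bool]] = {
    "line": lambda v: isinstance(v, str) and "\n" not in v,  # Single-line text
    "paragraph": lambda v: isinstance(v, str),  # Paragraph text (line breaks allowed)
    "file": lambda v: isinstance(v, list) and len(v) > 0,  # File upload: one or more files
    "number": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool),
    "boolean": lambda v: isinstance(v, bool),  # On/off toggle
}


@dataclass
class GlobalVariable:
    name: str  # descriptive name shown to the user
    kind: str  # one of the VALIDATORS labels
    key: str  # unique variable name
    optional: bool = False


def validate_inputs(variables: list[GlobalVariable], inputs: dict[str, Any]) -> list[str]:
    """Return error messages; an empty list means the inputs are acceptable."""
    errors = []
    keys = [v.key for v in variables]
    if len(keys) != len(set(keys)):
        errors.append("variable keys must be unique")
    for var in variables:
        if var.key not in inputs:
            if not var.optional:
                errors.append(f"missing required variable '{var.key}'")
            continue
        if not VALIDATORS[var.kind](inputs[var.key]):
            errors.append(f"'{var.key}' is not a valid {var.kind} value")
    return errors


variables = [
    GlobalVariable(name="Customer name", kind="line", key="customer"),
    GlobalVariable(name="Needs follow-up?", kind="boolean", key="follow_up", optional=True),
]
print(validate_inputs(variables, {"customer": "Acme"}))  # prints []
```

Skipping the optional `follow_up` variable produces no error, while omitting `customer` would.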

@ -5,7 +5,6 @@ sidebar_custom_props: {

categoryIcon: LucideHome

}

---

# Begin component

The starting component in a workflow.

@ -39,9 +38,9 @@ An agent in conversational mode begins with an opening greeting. It is the agent

You can define global variables within the **Begin** component, which can be either mandatory or optional. Once set, users will need to provide values for these variables when engaging with the agent. Click **+ Add variable** to add a global variable. Each variable has the following attributes:

- **Name**: _Required_

A descriptive name providing additional details about the variable.

- **Type**: _Required_

The type of the variable:

- **Single-line text**: Accepts a single line of text without line breaks.

- **Paragraph text**: Accepts multiple lines of text, including line breaks.

@ -49,7 +48,7 @@ You can define global variables within the **Begin** component, which can be eit

- **File upload**: Requires the user to upload one or more files.

- **Number**: Accepts a number as input.

- **Boolean**: Requires the user to toggle between on and off.

- **Key**: _Required_

The unique variable name.

- **Optional**: A toggle indicating whether the variable is optional.

@ -5,10 +5,9 @@ sidebar_custom_props: {

categoryIcon: LucideSwatchBook

}

---

# Categorize component

A component that classifies user inputs and applies strategies accordingly.

---

@ -26,7 +25,7 @@ A **Categorize** component is essential when you need the LLM to help you identi

Select the source for categorization.

The **Categorize** component relies on query variables to specify its data inputs (queries). All global variables defined before the **Categorize** component are available in the dropdown list.

### Input

@ -34,7 +33,7 @@ The **Categorize** component relies on query variables to specify its data input

The **Categorize** component relies on input variables to specify its data inputs (queries). Click **+ Add variable** in the **Input** section to add the desired input variables. There are two types of input variables: **Reference** and **Text**.

- **Reference**: Uses a component's output or a user input as the data source. You are required to select from the dropdown menu:

- A component ID under **Component Output**, or

- A global variable under **Begin input**, which is defined in the **Begin** component.

- **Text**: Uses fixed text as the query. You are required to enter static text.

@ -42,29 +41,29 @@ The **Categorize** component relies on input variables to specify its data input

Click the dropdown menu of **Model** to show the model configuration window.

- **Model**: The chat model to use.

- Ensure you set the chat model correctly on the **Model providers** page.

- You can use different models for different components to increase flexibility or improve overall performance.

- **Creativity**: A shortcut to the **Temperature**, **Top P**, **Presence penalty**, and **Frequency penalty** settings that indicates how much freedom the model has. Each preset (**Improvise**, **Precise**, or **Balance**) corresponds to a unique combination of **Temperature**, **Top P**, **Presence penalty**, and **Frequency penalty**.

This parameter has three options:

- **Improvise**: Produces more creative responses.

- **Precise**: (Default) Produces more conservative responses.

- **Balance**: A middle ground between **Improvise** and **Precise**.

- **Temperature**: The randomness level of the model's output.

Defaults to 0.1.

- Lower values lead to more deterministic and predictable outputs.

- Higher values lead to more creative and varied outputs.

- A temperature of zero makes decoding effectively greedy, so the same prompt generally produces the same output.

- **Top P**: Nucleus sampling.

- Restricts sampling to the smallest set of most likely tokens whose cumulative probability reaches a threshold *P*, which reduces the likelihood of generating unnatural text from the low-probability tail.

- Defaults to 0.3.

- **Presence penalty**: Encourages the model to include a more diverse range of tokens in the response.

- A higher **presence penalty** value makes the model more likely to generate tokens that have not yet appeared in the generated text.

- Defaults to 0.4.

- **Frequency penalty**: Discourages the model from repeating the same words or phrases too frequently in the generated text.

- A higher **frequency penalty** value results in the model being more conservative in its use of repeated tokens.

- Defaults to 0.7.

- **Max tokens**:

This sets the maximum length of the model's output, measured in the number of tokens (words or pieces of words). It is disabled by default, allowing the model to determine the number of tokens in its responses.
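
The two penalties are often described (for example, in OpenAI's API documentation) as subtractions from a token's logit based on how many times it has already appeared: the frequency penalty scales with that count, while the presence penalty applies once. The sketch below follows that formulation, using whole words as stand-in tokens and the defaults listed above. Whether your model provider implements the penalties exactly this way is an assumption, and `apply_penalties` is a hypothetical helper.

```python
from collections import Counter


def apply_penalties(
    logits: dict[str, float],
    generated: list[str],
    presence_penalty: float = 0.4,
    frequency_penalty: float = 0.7,
) -> dict[str, float]:
    """Lower the logit of every token that already appears in `generated`.

    - presence_penalty: flat deduction applied once if the token has appeared at all.
    - frequency_penalty: deduction multiplied by how many times the token has appeared.
    """
    counts = Counter(generated)
    adjusted = {}
    for token, logit in logits.items():
        c = counts[token]  # 0 for tokens not generated yet
        adjusted[token] = logit - c * frequency_penalty - (presence_penalty if c > 0 else 0.0)
    return adjusted


logits = {"data": 2.0, "retrieval": 1.8, "agent": 1.0}  # hypothetical next-token scores
history = ["data", "data", "agent"]  # tokens generated so far
# "retrieval" has not been used yet, so it now has the highest logit.
print(apply_penalties(logits, history))
```

With both penalties at 0, the logits are left unchanged; raising either one shifts probability toward tokens the model has not used yet.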

:::tip NOTE

@ -84,7 +83,7 @@ This feature is used for multi-turn dialogue *only*. If your **Categorize** comp

### Category name

A **Categorize** component must have at least two categories. This field sets the name of the category. Click **+ Add Item** to include the intended categories.

:::tip NOTE

You will notice that the category name is auto-populated. No worries. Each category is assigned a random name upon creation. Feel free to change it to a name that is understandable to the LLM.

@ -92,7 +91,7 @@ You will notice that the category name is auto-populated. No worries. Each categ

#### Description

Description of this category.

You can enter criteria, situations, or other information that may help the LLM determine which inputs belong in this category.

@ -5,7 +5,6 @@ sidebar_custom_props: {

categoryIcon: LucideBlocks

}

---

# Title chunker component

A component that splits texts into chunks by heading level.
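
As a rough illustration of heading-based chunking, the sketch below splits Markdown text at headings of a chosen level, so each chunk starts at a heading and keeps everything beneath it until the next heading of the same or a higher level. It assumes Markdown `#` headings as input; `split_by_heading` is a hypothetical function, and RAGFlow's own boundary rules may differ.

```python
import re


def split_by_heading(text: str, level: int = 1) -> list[str]:
    """Split Markdown text into chunks that each start at a heading of `level` or above.

    Headings deeper than `level` (e.g. H3 when level=2) stay inside the current chunk.
    """
    boundary = re.compile(r"^#{1,%d}\s" % level)  # level=2 matches "# " and "## " only
    chunks: list[list[str]] = [[]]
    for line in text.splitlines():
        if boundary.match(line) and chunks[-1]:
            chunks.append([])  # a boundary heading opens a new chunk
        chunks[-1].append(line)
    # Join each chunk's lines and drop chunks that contain only whitespace.
    return ["\n".join(c).strip() for c in chunks if "".join(c).strip()]


doc = "# Intro\nHello\n## Setup\nInstall\n# Usage\nRun"
print(split_by_heading(doc, level=1))  # 2 chunks: Intro (with Setup inside) and Usage
print(split_by_heading(doc, level=2))  # 3 chunks: Intro, Setup, and Usage
```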

@ -26,7 +25,7 @@ Placing a **Title chunker** after a **Token chunker** is invalid and will cause

### Hierarchy

Specifies the heading level to define chunk boundaries:

- H1

- H2

@ -5,7 +5,6 @@ sidebar_custom_props: {

categoryIcon: LucideBlocks

}

---

# Token chunker component

A component that splits texts into chunks, respecting a maximum token limit and using delimiters to find optimal breakpoints.
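
The sketch below illustrates the general idea behind token-limited chunking with delimiter breakpoints: split the text after delimiter characters, then greedily pack the resulting segments into chunks without exceeding the token limit. It is a simplified stand-in rather than RAGFlow's implementation: whitespace-separated words replace a real tokenizer, and the function names and default delimiter set are hypothetical.

```python
import re


def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def token_chunk(text: str, max_tokens: int = 128, delimiters: str = "\n.!?;") -> list[str]:
    """Greedily pack delimiter-bounded segments into chunks of at most `max_tokens` tokens."""
    # Split *after* each delimiter character so the delimiter stays with its segment.
    pattern = "(?<=[" + re.escape(delimiters) + "])"
    segments = [s for s in re.split(pattern, text) if s.strip()]

    chunks: list[str] = []
    current = ""
    for seg in segments:
        # Close the current chunk if adding this segment would exceed the limit.
        if current and count_tokens(current + seg) > max_tokens:
            chunks.append(current.strip())
            current = ""
        if count_tokens(seg) > max_tokens:
            # No delimiter within the limit: fall back to fixed-size word windows.
            words = seg.split()
            for i in range(0, len(words), max_tokens):
                chunks.append(" ".join(words[i:i + max_tokens]))
            continue
        current += seg
    if current.strip():
        chunks.append(current.strip())
    return chunks


print(token_chunk("One two three. Four five. Six seven eight nine.", max_tokens=5))
```

Breaking at delimiters first and only cutting mid-sentence as a last resort is what keeps chunks readable while still honoring the limit.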

@ -5,7 +5,6 @@ sidebar_custom_props: {

categoryIcon: LucideCodeXml

}

---

# Code component

A component that enables users to integrate Python or JavaScript code into their Agent for dynamic data processing.

@ -36,7 +35,7 @@ If your RAGFlow Sandbox is not working, please be sure to consult the [Troublesh

### 3. (Optional) Install necessary dependencies

If you need to import your own Python or JavaScript packages into Sandbox, please follow the commands provided in the [How to import my own Python or JavaScript packages into Sandbox?](#how-to-import-my-own-python-or-javascript-packages-into-sandbox) section to install the additional dependencies.

### 4. Enable Sandbox-specific settings in RAGFlow

@ -46,11 +45,11 @@ Ensure all Sandbox-specific settings are enabled in **ragflow/docker/.env**.

Any changes to the configuration or environment *require* a full service restart to take effect.

## Configurations

### Input

You can specify multiple input sources for the **Code** component. Click **+ Add variable** in the **Input variables** section to include the desired input variables.

### Code

@ -62,7 +61,7 @@ If your code implementation includes defined variables, whether input or output

#### A Python code example

```Python

def main(arg1: str, arg2: str) -> dict:

    return {
        "result": arg1 + arg2,

@ -105,7 +104,7 @@ The defined output variable(s) will be auto-populated here.

### `HTTPConnectionPool(host='sandbox-executor-manager', port=9385): Read timed out.`

**Root cause**

- You did not properly install gVisor and `runsc` was not recognized as a valid Docker runtime.

- You did not pull the required base images for the runners and no runner was started.

@ -147,11 +146,11 @@ docker build -t sandbox-executor-manager:latest ./sandbox/executor_manager

### `HTTPConnectionPool(host='none', port=9385): Max retries exceeded.`

**Root cause**

`sandbox-executor-manager` is not mapped in `/etc/hosts`.

**Solution**

Add a new entry to `/etc/hosts`:

@ -159,11 +158,11 @@ Add a new entry to `/etc/hosts`:

### `Container pool is busy`

**Root cause**

All runners are currently in use, executing tasks.

**Solution**

Please try again shortly or increase the pool size in the configuration to improve availability and reduce waiting times.

@ -208,7 +207,7 @@ To import your JavaScript packages, navigate to `sandbox_base_image/nodejs` and

(ragflow) ➜ ragflow/sandbox main ✓ cd sandbox_base_image/nodejs

(ragflow) ➜ ragflow/sandbox/sandbox_base_image/nodejs main ✓ npm install lodash

(ragflow) ➜ ragflow/sandbox/sandbox_base_image/nodejs main ✓ cd ../.. # go back to sandbox root directory

@ -5,14 +5,13 @@ sidebar_custom_props: {

categoryIcon: RagSql

}

---

# Execute SQL tool

A tool that executes SQL queries on a specified relational database.

---

The **Execute SQL** tool enables you to connect to a relational database and run SQL queries, whether entered directly or generated by the system’s Text2SQL capability via an **Agent** component.
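
Conceptually, the tool opens a connection to the configured database, runs the SQL statement, and hands the rows back to the workflow. The sketch below mimics that round trip with Python's built-in `sqlite3` module and an in-memory database as a stand-in; the `run_sql` helper and the `orders` table are illustrative assumptions, not the tool's actual implementation or its list of supported databases.

```python
import sqlite3


def run_sql(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> list[dict]:
    """Execute one SQL statement and return the result rows as dictionaries."""
    cursor = conn.execute(sql, params)
    if cursor.description is None:  # statements such as CREATE or INSERT return no rows
        conn.commit()
        return []
    columns = [col[0] for col in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchall()]


conn = sqlite3.connect(":memory:")  # stand-in for the relational database you configure
run_sql(conn, "CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders (region, amount) VALUES (?, ?)",
    [("EU", 120.0), ("US", 80.0), ("EU", 30.0)],
)
# A query of the kind an Agent's Text2SQL step might produce:
print(run_sql(conn, "SELECT region, SUM(amount) AS total FROM orders GROUP BY region ORDER BY region"))
```

Passing values through `params` (the `?` placeholders) instead of formatting them into the SQL string is the standard way to avoid SQL injection with `sqlite3` and most other database drivers.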

## Prerequisites

@ -5,10 +5,9 @@ sidebar_custom_props: {

categoryIcon: RagHTTP

}

---

# HTTP request component

A component that calls remote services.

---

@ -5,7 +5,6 @@ sidebar_custom_props: {

categoryIcon: LucideListPlus

}

---

# Indexer component

A component that defines how chunks are indexed.

@ -5,19 +5,18 @@ sidebar_custom_props: {

categoryIcon: LucideRepeat2

}

---

# Iteration component

A component that splits text input into text segments and iterates a predefined workflow for each one.

---

An **Iteration** component can divide text input into text segments and apply its built-in component workflow to each segment.

## Scenario

An **Iteration** component is essential when a workflow loop is required and the loop count is *not* fixed but depends on the number of segments created from the output of specific agent components.

- For instance, suppose you plan to feed several paragraphs, each with its own focus, into an LLM for content generation, and feeding them to the LLM all at once could create confusion or contradictions. In that case, you can use an **Iteration** component that encapsulates a **Generate** component to repeat the content generation process for each paragraph.

- Another example: If you wish to use the LLM to translate a lengthy paper into a target language without exceeding its token limit, consider using an **Iteration** component, which encapsulates a **Generate** component, to break the paper into smaller pieces and repeat the translation process for each one.
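
Stripped of the canvas, both examples follow a split-then-map loop: segment the input, run the same internal workflow once per segment (the role of **IterationItem**), and collect the results in order. In this sketch, `iterate` and `translate_segment` are hypothetical names, the latter standing in for the encapsulated component (for example, an LLM call), and splitting on blank lines is an assumed delimiter.

```python
from typing import Callable


def iterate(text: str, workflow: Callable[[str], str], delimiter: str = "\n\n") -> list[str]:
    """Split `text` into segments and run `workflow` once per segment, preserving order."""
    segments = [s.strip() for s in text.split(delimiter) if s.strip()]
    results = []
    for item in segments:  # `item` plays the role of IterationItem
        results.append(workflow(item))
    return results


def translate_segment(segment: str) -> str:
    """Hypothetical stand-in for an LLM call; here it only tags the segment."""
    return f"[translated] {segment}"


paper = "Abstract text.\n\nIntroduction text.\n\nConclusion text."
for line in iterate(paper, translate_segment):
    print(line)
```

Because each call sees only one segment, no single request has to fit the whole paper within the model's token limit.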

@ -32,12 +31,12 @@ Each **Iteration** component includes an internal **IterationItem** component. T

The **IterationItem** component is visible *only* to the components encapsulated by the current **Iteration** component.

:::

### Build an internal workflow

You can pull other components into the **Iteration** component to build an internal workflow. These added internal components are not visible to components outside the current **Iteration** component.

:::danger IMPORTANT

To reference the created text segments from an added internal component, simply add a **Reference** variable that equals **IterationItem** within the **Input** section of that internal component. There is no need to reference the corresponding external component, as the **IterationItem** component manages the loop of the workflow for all created text segments.

:::

:::tip NOTE

@ -51,7 +50,7 @@ An added internal component can reference an external component when necessary.

The **Iteration** component uses input variables to specify its data inputs, namely the texts to be segmented. You are allowed to specify multiple input sources for the **Iteration** component. Click **+ Add variable** in the **Input** section to include the desired input variables. There are two types of input variables: **Reference** and **Text**.

- **Reference**: Uses a component's output or a user input as the data source. You are required to select from the dropdown menu:

- A component ID under **Component Output**, or

- A global variable under **Begin input**, which is defined in the **Begin** component.

- **Text**: Uses fixed text as the query. You are required to enter static text.

@ -5,7 +5,6 @@ sidebar_custom_props: {

categoryIcon: LucideMessageSquareReply

}

---

# Message component

A component that sends out a static or dynamic message.

@ -5,7 +5,6 @@ sidebar_custom_props: {

categoryIcon: LucideFilePlay

}

---

# Parser component

A component that sets the parsing rules for your dataset.

@ -57,12 +56,12 @@ Starting from v0.22.0, RAGFlow includes MinerU (≥ 2.6.3) as an optional PDF p

- `"vlm-mlx-engine"`

- `"vlm-vllm-async-engine"`

- `"vlm-lmdeploy-engine"`.

- `MINERU_SERVER_URL`: (optional) The downstream vLLM HTTP server (e.g., `http://vllm-host:30000`). Applicable when `MINERU_BACKEND` is set to `"vlm-http-client"`.

- `MINERU_OUTPUT_DIR`: (optional) The local directory for holding the outputs of the MinerU API service (zip/JSON) before ingestion.

- `MINERU_DELETE_OUTPUT`: Whether to delete temporary output when a temporary directory is used:

- `1`: Delete.

- `0`: Retain.

3. In the web UI, navigate to your dataset's **Configuration** page and find the **Ingestion pipeline** section:

- If you decide to use a chunking method from the **Built-in** dropdown, ensure it supports PDF parsing, then select **MinerU** from the **PDF parser** dropdown.

- If you use a custom ingestion pipeline instead, select **MinerU** in the **PDF parser** section of the **Parser** component.

@ -5,7 +5,6 @@ sidebar_custom_props: {

categoryIcon: LucideFolderSearch

}

---

# Retrieval component

A component that retrieves information from specified datasets.

@ -24,13 +23,13 @@ Ensure you [have properly configured your target dataset(s)](../../dataset/confi

## Quickstart

### 1. Click on a **Retrieval** component to show its configuration panel

The corresponding configuration panel appears to the right of the canvas. Use this panel to define and fine-tune the **Retrieval** component's search behavior.

### 2. Input query variable(s)

The **Retrieval** component depends on query variables to specify its queries.

:::caution IMPORTANT

- If you use the **Retrieval** component as a standalone workflow module, input query variables in the **Input Variables** text box.

@ -77,7 +76,7 @@ Select the query source for retrieval. Defaults to `sys.query`, which is the def

The **Retrieval** component relies on query variables to specify its queries. All global variables defined before the **Retrieval** component can also be used as queries. Use the `(x)` button or type `/` to show all the available query variables.

### Knowledge bases

Select the dataset(s) to retrieve data from.

@ -113,7 +112,7 @@ Using a rerank model will *significantly* increase the system's response time.

### Empty response

- Set this as a response if no results are retrieved from the dataset(s) for your query, or

- Leave this field blank to allow the chat model to improvise when nothing is found.

:::caution WARNING

@ -5,10 +5,9 @@ sidebar_custom_props: {

categoryIcon: LucideSplit

}

---

# Switch component

A component that evaluates whether specified conditions are met and directs the flow of execution accordingly.

---

@ -16,7 +15,7 @@ A **Switch** component evaluates conditions based on the output of specific comp

## Scenarios

A **Switch** component is essential for condition-based direction of execution flow. While it shares similarities with the [Categorize](./categorize.mdx) component, which is also used in multi-pronged strategies, the key distinction lies in their approach: the evaluation of the **Switch** component is rule-based, whereas the **Categorize** component involves AI and uses an LLM for decision-making.

## Configurations

@ -42,12 +41,12 @@ When you have added multiple conditions for a specific case, a **Logical operato

- Greater equal

- Less than

- Less equal

- Contains

- Not contains

- Starts with

- Ends with

- Is empty

- Not empty

- **Value**: A single value, which can be an integer, float, or string.

- Delimiters, multiple values, or expressions are *not* supported.
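
Because a **Switch** is rule-based, its behavior can be sketched without any LLM. The sketch below is hypothetical: the `OPERATORS` table, `case_matches`, and `route` are illustrative names. Combining conditions with `and`/`or`, checking cases in order, and falling back to an else branch are assumptions about evaluation order rather than documented behavior, and **Equals**, **Not equal**, and **Greater than** are assumed because this excerpt of the operator list starts at **Greater equal**.

```python
from typing import Any, Callable

# Comparison operators from the list above, plus three assumed ones (see lead-in).
OPERATORS: dict[str, Callable[[Any, Any], bool]] = {
    "Equals": lambda a, b: a == b,
    "Not equal": lambda a, b: a != b,
    "Greater than": lambda a, b: a > b,
    "Greater equal": lambda a, b: a >= b,
    "Less than": lambda a, b: a < b,
    "Less equal": lambda a, b: a <= b,
    "Contains": lambda a, b: b in a,
    "Not contains": lambda a, b: b not in a,
    "Starts with": lambda a, b: str(a).startswith(str(b)),
    "Ends with": lambda a, b: str(a).endswith(str(b)),
    "Is empty": lambda a, _: a is None or a == "" or a == [],
    "Not empty": lambda a, _: not (a is None or a == "" or a == []),
}

Condition = tuple[Any, str, Any]  # (component output, operator, single value)


def case_matches(conditions: list[Condition], logical_operator: str = "and") -> bool:
    """Evaluate every condition and combine the results with AND or OR."""
    results = [OPERATORS[op](actual, value) for actual, op, value in conditions]
    return all(results) if logical_operator == "and" else any(results)


def route(cases: list[tuple[str, list[Condition], str]], else_target: str) -> str:
    """Return the target of the first matching case, otherwise the else branch."""
    for target, conditions, logical_operator in cases:
        if case_matches(conditions, logical_operator):
            return target
    return else_target


score, answer = 0.82, "Refund approved"
cases = [
    ("escalate", [(score, "Less than", 0.5)], "and"),
    ("reply", [(answer, "Contains", "approved"), (score, "Greater equal", 0.8)], "and"),
]
print(route(cases, else_target="fallback"))  # prints reply
```

Each **Value** here is a single integer, float, or string, matching the restriction above.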

@ -5,7 +5,6 @@ sidebar_custom_props: {

categoryIcon: LucideType

}

---

# Text processing component

A component that merges or splits texts.

@ -27,7 +26,7 @@ Appears only when you select **Split** as method.

The variable to be split. Type `/` to quickly insert variables.

### Script

Template for the merge. Appears only when you select **Merge** as the method. Type `/` to quickly insert variables.
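
**Split** and **Merge** are roughly inverse operations. The sketch below shows the idea in plain Python: `split_text` breaks a value at any of several delimiters, and `merge_text` fills a template. Both function names and the delimiter list are hypothetical, and the `{name}` placeholder syntax is Python's `str.format_map`, used only for illustration; in RAGFlow you insert variables into the **Script** field by typing `/`.

```python
import re


def split_text(value: str, delimiters: list[str]) -> list[str]:
    """Split `value` at any of the given delimiters and drop empty pieces."""
    if not delimiters:
        return [value.strip()] if value.strip() else []
    pattern = "|".join(re.escape(d) for d in delimiters)
    return [piece.strip() for piece in re.split(pattern, value) if piece.strip()]


def merge_text(script: str, variables: dict[str, str]) -> str:
    """Fill `{name}` placeholders in the template with the matching variable values."""
    return script.format_map(variables)


pieces = split_text("apples, pears; plums", [",", ";"])
print(pieces)  # ['apples', 'pears', 'plums']
print(merge_text("Fruit: {first} and {rest}", {"first": pieces[0], "rest": ", ".join(pieces[1:])}))
```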

@ -5,7 +5,6 @@ sidebar_custom_props: {

categoryIcon: LucideFileStack

}

---

# Transformer component

A component that uses an LLM to extract insights from the chunks.

@ -16,7 +15,7 @@ A **Transformer** component indexes chunks and configures their storage formats
